Publications

All publications are listed in reverse chronological order. The most recent publications can be found on Google Scholar.

2025

- T-IM

DUAL-LIO: Dual-Inertia aided Lightweight Legged Odometry Using Body Constraints
Xinyi Wang, Yinchuan Wang, Junchen Chi, Yingying Wang, Shiyu Bai, Rui Song, and Chaoqun Wang
IEEE Transactions on Instrumentation and Measurement, Sep 2025

Low-cost and computationally efficient odometry is essential for enabling robots with limited payload capacity to perform various tasks. Despite many attempts to achieve accurate localization through multi-sensor fusion or inertial-only methods, balancing odometry accuracy, cost, and computational efficiency remains a significant challenge. In this paper, we propose a low-cost, lightweight, and accurate odometry for legged robots, called Dual Leg Inertial Measurement Unit (IMU) Odometry (DUAL-LIO), which uses data from two IMUs mounted on diagonal legs of the robot. Based on these measurements, the system estimates attitude, velocity, and position. To further mitigate integration drift, we introduce gait-specific constraints derived from the gait patterns, namely a zero-velocity constraint and a stride constraint. A continuous zero-velocity update (C-ZUPT) method is proposed to correct the system seamlessly throughout the entire process. Furthermore, the stride constraint exploits the maximal leg swing amplitude to improve the accuracy of state estimation. The dual-IMU configuration exploits the inter-IMU distance given by their diagonal placement on opposing legs to compensate for odometry errors through a spatial constraint. Experimental results on a public dataset validate the effectiveness of our method, showing a 46% accuracy improvement and a significant reduction in computation time compared with state-of-the-art methods. Our code is available at: https://github.com/Demixinyi/DUAL-LIO.
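The zero-velocity idea behind C-ZUPT can be illustrated with a textbook stance detector. The Python sketch below is not the DUAL-LIO implementation: it assumes a simple threshold test on the gyroscope and accelerometer magnitudes (the names `is_stationary` and `integrate_with_zupt` and the thresholds `gyro_thresh` and `acc_tol` are hypothetical) and, whenever stance is detected, resets the integrated velocity to zero instead of feeding a zero-velocity measurement into a filter as a full estimator would.

```python
import numpy as np

GRAVITY = 9.81  # m/s^2, magnitude of the specific force an IMU reads at rest


def is_stationary(gyro, acc, gyro_thresh=0.2, acc_tol=0.3):
    """Hypothetical stance test for one IMU sample.

    A small angular rate (rad/s) and a specific-force magnitude close to
    gravity (m/s^2) are taken as evidence that the foot is planted.
    """
    return bool(np.linalg.norm(gyro) < gyro_thresh
                and abs(np.linalg.norm(acc) - GRAVITY) < acc_tol)


def integrate_with_zupt(gyro, acc_body, acc_world, dt):
    """Dead-reckon position, forcing velocity to zero during detected stance.

    gyro, acc_body: (N, 3) raw IMU samples, used only for stance detection.
    acc_world: (N, 3) gravity-compensated acceleration in the world frame;
    attitude estimation is assumed to happen elsewhere.
    Returns the final position and a boolean stance flag per sample.
    """
    vel = np.zeros(3)
    pos = np.zeros(3)
    stance = np.zeros(len(gyro), dtype=bool)
    for k, (w, f, a) in enumerate(zip(gyro, acc_body, acc_world)):
        vel = vel + a * dt
        if is_stationary(w, f):
            stance[k] = True
            vel = np.zeros(3)  # zero-velocity "measurement" applied as a hard reset
        pos = pos + vel * dt
    return pos, stance
```

With a stationary IMU and a 0.05 m/s² accelerometer bias, plain integration drifts about 2.5 m in 10 s, whereas the reset keeps the position at the origin. A hard reset is the crudest form of ZUPT; a filter-based update would also correct the correlated attitude and bias states.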

@article{wang2025receding, title = {DUAL-LIO: Dual-Inertia aided Lightweight Legged Odometry Using Body Constraints}, author = {Wang, Xinyi and Wang, Yinchuan and Chi, Junchen and Wang, Yingying and Bai, Shiyu and Song, Rui and Wang, Chaoqun}, journal = {IEEE Transactions on Instrumentation and Measurement}, year = {2025}, month = sep, doi = {10.1109/TIM.2025.3614810}, dimensions = {false}, google_scholar_id = {M3ejUd6NZC8C}, }

- T-Mech

Receding-Horizon Path Planning for Risk-Free Mapless Navigation in Uneven Terrains
Yinchuan Wang, Xiang Zhang, Yuhan Wang, Chaoqun Wang, Rui Song, and Yibin Li
IEEE/ASME Transactions on Mechatronics, Aug 2025

Autonomous navigation in uneven terrain is a hard problem for a robot, especially without a prior map of the environment. In this situation, the robot must decide where to go while keeping itself safe, which challenges its path-planning and decision-making modules. To address this problem, this study presents an integrated navigation framework that leads the robot through unknown, uneven terrain to a designated goal. We incrementally construct a risk-aware tree during mapless navigation. The tree nodes grow on the ground surface, and each edge reflects the risk of traversing it. The root of the tree moves with the robot's position for rapid path finding. In addition, we design a receding-horizon strategy for determining where to go in the unknown environment. Valuable tree nodes located at the boundary of the local grid map are filtered to select a series of subgoals. These subgoals guide the robot to the final target step by step, and the paths to the subgoals are continually optimized by rewiring the risk-aware tree for efficiency and safety. We evaluate our framework in both simulation and real-world experiments. The experimental results show that our approach achieves higher efficiency and efficacy than state-of-the-art methods.

@article{wang2025recedinh, title = {Receding-Horizon Path Planning for Risk-Free Mapless Navigation in Uneven Terrains}, author = {Wang, Yinchuan and Zhang, Xiang and Wang, Yuhan and Wang, Chaoqun and Song, Rui and Li, Yibin}, journal = {IEEE/ASME Transactions on Mechatronics}, year = {2025}, month = aug, doi = {10.1109/TMECH.2025.3595395}, dimensions = {false}, google_scholar_id = {WF5omc3nYNoC}, }

- IROS2025

Capsizing-Guided Trajectory Optimization for Autonomous Navigation with Rough Terrain
Wei Zhang, Yinchuan Wang, Wangtao Lu, Pengyu Zhang, Xiang Zhang, Yue Wang, and Chaoqun Wang
2025 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hangzhou, China, Aug 2025

Autonomous navigation in harsh environments is challenging for ground robots because of non-trivial obstacles and uneven terrain. It requires trajectory planning that balances safety and efficiency. The primary challenge is to generate a feasible trajectory that keeps the robot from tipping over while ensuring effective navigation. In this paper, we propose a capsizing-aware trajectory planner (CAP) for trajectory planning on uneven terrain. We analyze the tip-over stability of the robot on rough terrain. Based on this analysis, we define the traversable orientation, which indicates the safe range of robot orientations. This orientation is then incorporated into a capsizing-safety constraint for trajectory optimization. We employ a graph-based solver to compute a robust and feasible trajectory that satisfies the capsizing-safety constraint. Extensive simulation and real-world experiments validate the effectiveness and robustness of the proposed method. The results demonstrate that CAP outperforms existing state-of-the-art approaches and provides better navigation performance on uneven terrain.
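The tip-over analysis itself is not detailed in this summary. As a hedged illustration of what a "traversable orientation" can mean, the classic quasi-static criterion keeps a vehicle upright as long as the vertical line through its centre of mass (CoM) meets the contact plane inside the support polygon. The sketch below assumes a rectangular footprint, ZYX Euler angles, and hypothetical dimensions and safety margin; it is a generic criterion, not CAP's constraint, and the function names are illustrative.

```python
import math


def com_ground_projection(roll, pitch, com_height, com_xy=(0.0, 0.0)):
    """Where the vertical line through the CoM meets the contact plane.

    Body frame: x forward, y left, z up, origin at the footprint centre on the
    contact plane. Angles are ZYX Euler angles in radians; yaw has no effect.
    """
    cx, cy = com_xy
    x = cx + com_height * math.tan(pitch) / math.cos(roll)
    y = cy - com_height * math.tan(roll)
    return x, y


def is_tipover_safe(roll, pitch, length, width, com_height, margin=0.0, com_xy=(0.0, 0.0)):
    """Quasi-static test: the projected CoM must stay inside the footprint shrunk by `margin`."""
    x, y = com_ground_projection(roll, pitch, com_height, com_xy)
    return abs(x) <= length / 2 - margin and abs(y) <= width / 2 - margin


def max_roll_pitch(length, width, com_height):
    """Single-axis tilt limits (radians) for a centred CoM."""
    return math.atan2(width / 2, com_height), math.atan2(length / 2, com_height)
```

For a 0.8 m by 0.5 m footprint with the CoM 0.25 m above the contact plane, `max_roll_pitch` gives a roll limit of 45° and a pitch limit of about 58°. Dynamic effects such as acceleration and wheel-terrain impacts would shrink this range further.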

@inproceedings{wang2024history, title = {Capsizing-Guided Trajectory Optimization for Autonomous Navigation with Rough Terrain}, author = {Zhang, Wei and Wang, Yinchuan and Lu, Wangtao and Zhang, Pengyu and Zhang, Xiang and Wang, Yue and Wang, Chaoqun}, booktitle = {2025 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)}, location = {Hangzhou, China}, year = {2025}, dimensions = {false}, google_scholar_id = {ufrVoPGSRksC}, }

- TCDS

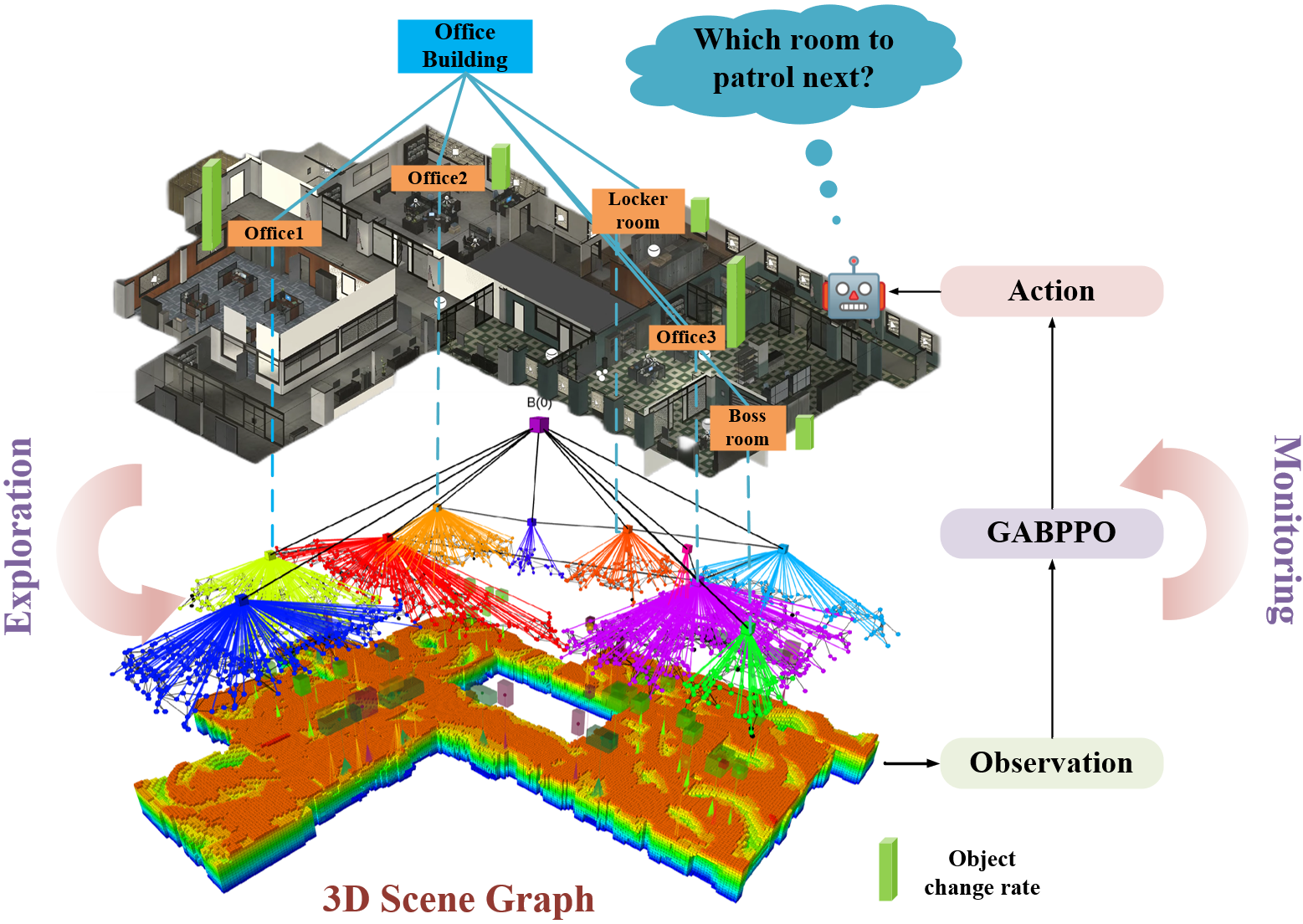

SELM: From Efficient Autonomous Exploration to Long-term Monitoring in Semantic Level
Fang Lang, Yongsen Qin, Yinchuan Wang, Jin Liu, Chaoqun Wang, Wei Song, Qiuguo Zhu, and Rui Song
IEEE Transactions on Cognitive and Developmental Systems, Jan 2025

Maintaining up-to-date environmental models from initial deployment through long-term autonomy in service is critical for applications such as navigation and task planning. To address the challenges of persistent monitoring in unknown environments, we introduce a two-stage monitoring strategy, termed the Semantic-level autonomous Exploration and Long-term environment Monitoring (SELM) framework. In the first stage, we introduce a novel semantic exploration method that adapts quickly to new environments. Leveraging the semantic information within the incrementally constructed 3D Scene Graph (3DSG), we combine Next-Best-View (NBV) selection with room semantics, yielding a more efficient and comprehensive approach to exploring multi-room indoor environments. In addition, the exploration provides patrol routes, the room distance-connectivity graph, and complete initial environment states for subsequent monitoring. The monitoring stage persistently patrols to update the world model in the presence of dynamic changes, including changes in object positions. We formulate the long-term monitoring problem as a Partially Observable Markov Decision Process (POMDP) to cope with environmental uncertainty. To solve the POMDP, we propose the Graph Attention Bidirectional long short-term memory Proximal Policy Optimization (GABPPO) algorithm to obtain the optimal patrol strategy. The feasibility and effectiveness of the proposed SELM framework are verified through extensive experiments.

@article{lang2025selm, title = {SELM: From Efficient Autonomous Exploration to Long-term Monitoring in Semantic Level}, author = {Lang, Fang and Qin, Yongsen and Wang, Yinchuan and Liu, Jin and Wang, Chaoqun and Song, Wei and Zhu, Qiuguo and Song, Rui}, journal = {IEEE Transactions on Cognitive and Developmental Systems}, year = {2025}, month = jan, doi = {10.1109/TCDS.2025.3531367}, dimensions = {false}, google_scholar_id = {eQOLeE2rZwMC}, }

- J-FR

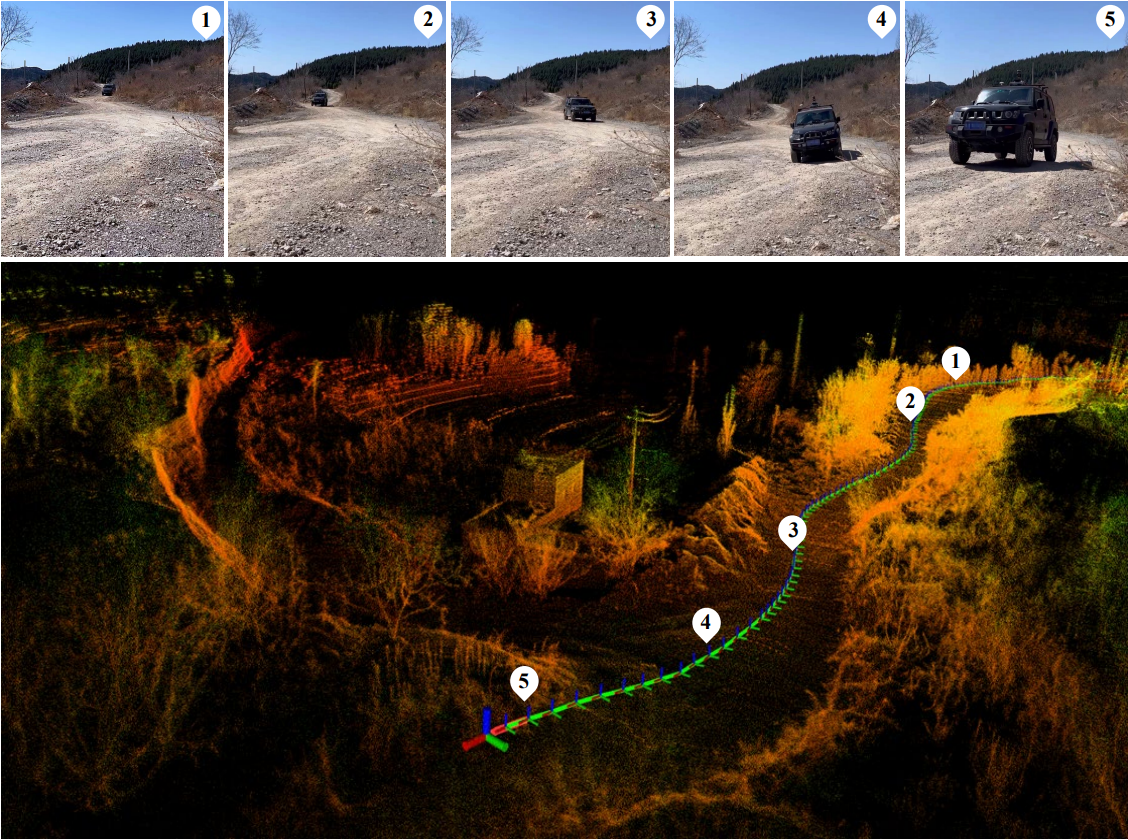

ROLO-SLAM: Rotation-Optimized LiDAR-Only SLAM in Uneven Terrain with Ground Vehicle
Yinchuan Wang, Bin Ren, Xiang Zhang, Pengyu Wang, Chaoqun Wang, Rui Song, Yibin Li, and Max Q.-H. Meng
Journal of Field Robotics, Jan 2025

LiDAR-based SLAM is recognized as an effective way to provide localization guidance in rough environments. However, off-the-shelf LiDAR-based SLAM methods suffer from significant pose estimation drift, particularly in the components related to the vertical direction, when traversing uneven terrain. This deficiency typically leads to a conspicuously distorted global map. In this article, we present a LiDAR-based SLAM method, termed Rotation-Optimized LiDAR-Only (ROLO) SLAM, that improves the accuracy of pose estimation for ground vehicles in rough terrain. The method uses a forward location prediction to coarsely eliminate the location difference between consecutive scans, which enables separate and accurate determination of location and orientation at the front end. Furthermore, we adopt a parallel-capable spatial voxelization for correspondence matching. We develop a spherical alignment-guided rotation registration within each voxel to estimate the rotation of the vehicle. By incorporating geometric alignment, we introduce a motion constraint into the optimization formulation to enable fast and effective estimation of the LiDAR's translation. Subsequently, we extract several keyframes to construct a submap and align the current scan to the submap for precise pose estimation. Meanwhile, a global-scale factor graph is established to help reduce cumulative errors. We evaluate our method through diverse experiments in various scenes. The results demonstrate that ROLO-SLAM excels in pose estimation for ground vehicles and outperforms existing state-of-the-art LiDAR SLAM frameworks.
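The voxel-based correspondence search can be sketched with a hash map keyed by integer voxel indices. This is a generic illustration, not ROLO-SLAM's implementation: the spherical alignment-guided rotation registration is not reproduced, and pairing each source point with the centroid of the target points in the same voxel is a simplifying assumption. The function names are hypothetical. Because every voxel is handled independently, the per-voxel work is what makes such a scheme parallel-friendly.

```python
from collections import defaultdict

import numpy as np


def voxel_keys(points, voxel_size):
    """Integer voxel index per point; floor keeps negative coordinates consistent."""
    return np.floor(points / voxel_size).astype(np.int64)


def build_voxel_map(points, voxel_size):
    """Group point indices by voxel (a hash map keyed by the integer index tuple)."""
    voxels = defaultdict(list)
    for i, key in enumerate(map(tuple, voxel_keys(points, voxel_size))):
        voxels[key].append(i)
    return dict(voxels)


def voxel_correspondences(source, target, voxel_size):
    """Pair each source point with the centroid of the target points in its voxel.

    Source points whose voxel holds no target points get no correspondence.
    """
    target_map = build_voxel_map(target, voxel_size)
    centroids = {k: target[idx].mean(axis=0) for k, idx in target_map.items()}
    pairs = []
    for i, key in enumerate(map(tuple, voxel_keys(source, voxel_size))):
        if key in centroids:
            pairs.append((i, centroids[key]))
    return pairs
```

A real front end would typically also search neighbouring voxels and fit a local model (for example a plane or a distribution) per voxel rather than a bare centroid.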

@article{wang2025rolo, title = {ROLO-SLAM: Rotation-Optimized LiDAR-Only SLAM in Uneven Terrain with Ground Vehicle}, author = {Wang, Yinchuan and Ren, Bin and Zhang, Xiang and Wang, Pengyu and Wang, Chaoqun and Song, Rui and Li, Yibin and Meng, Max Q.-H.}, journal = {Journal of Field Robotics}, year = {2025}, month = jan, doi = {10.1002/rob.22505}, dimensions = {false}, google_scholar_id = {YsMSGLbcyi4C}, }

2024

- IEEE T-IV

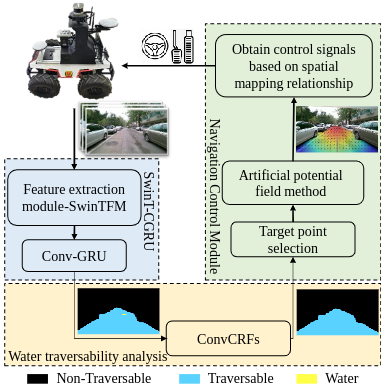

Transformer-based Traversability Analysis for Autonomous Navigation in Outdoor Environments with Water Hazard
Mingrui Xu, Yinchuan Wang, Xiang Zhang, Donghui Mao, Chaoqun Wang, Rui Song, and Yibin Li
IEEE Transactions on Intelligent Vehicles, Jun 2024

Traversability analysis is vital for autonomous vehicle navigation in outdoor environments. This paper focuses on navigation in outdoor environments with water hazards, where water areas can neither simply be ignored nor be deemed non-traversable if navigation is to be safe and efficient. To this end, we present a tightly integrated navigation framework consisting of an environmental traversability analysis model and a control signal acquisition model. The traversability analysis model comprises the SwinT-CGRU semantic segmentation model and the ConvCRFs water analysis model proposed in this paper. SwinT-CGRU efficiently extracts features from successive input images and provides an initial analysis of environmental traversability. To further analyze the water regions, we employ ConvCRFs, which determine water traversability effectively. The results of the water traversability analysis provide a reliable basis for vehicle navigation in outdoor scenarios. Based on the traversability analysis results, we propose a path planning approach that adapts the artificial potential field method to the image space. The control signals for steering and driving the vehicle are obtained through an established mapping from the image space to the vehicle space. Experiments demonstrate that our segmentation model improves mIoU by 2.09% to 18.21% on the RELLIS-3D dataset. Furthermore, our navigation algorithm exhibits real-time performance and stability, ensuring the safety of vehicles in outdoor environments.
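Planning with an artificial potential field in image space can be sketched on a binary traversability mask, for example one produced by a segmentation network. The sketch below uses the textbook attractive and repulsive potentials with hypothetical gains `k_att` and `k_rep` and influence radius `d0`, followed by greedy descent over the 8-neighbourhood; the function names are illustrative, and the paper's adaptation of the method and its mapping from image space to steering and driving commands are not reproduced.

```python
import numpy as np


def potential_field(trav_mask, goal, k_att=1.0, k_rep=100.0, d0=5.0):
    """Classic APF over a 2D grid; trav_mask[r, c] is True where traversable."""
    rows, cols = np.indices(trav_mask.shape)
    d_goal = np.hypot(rows - goal[0], cols - goal[1])
    u = 0.5 * k_att * d_goal ** 2  # attractive term, minimal at the goal pixel
    obs = np.argwhere(~trav_mask)
    if len(obs):
        # Distance from every cell to its nearest obstacle cell (brute force, small grids only).
        d_obs = np.min(np.hypot(rows[..., None] - obs[:, 0], cols[..., None] - obs[:, 1]), axis=-1)
        safe = np.maximum(d_obs, 1e-6)
        # Repulsive term is active only within distance d0 of an obstacle.
        u = u + np.where(d_obs < d0, 0.5 * k_rep * (1.0 / safe - 1.0 / d0) ** 2, 0.0)
        u[~trav_mask] = np.inf  # obstacle cells can never be entered
    return u


def descend(u, start, max_steps=500):
    """Greedy 8-neighbour descent; stops at the goal or at a local minimum."""
    path = [tuple(start)]
    r, c = start
    for _ in range(max_steps):
        best = (r, c)
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if 0 <= nr < u.shape[0] and 0 <= nc < u.shape[1] and u[nr, nc] < u[best]:
                    best = (nr, nc)
        if best == (r, c):
            break
        r, c = best
        path.append(best)
    return path
```

Greedy descent can stall in a local minimum (for example, directly behind a concave obstacle), which is the usual weakness of potential-field planners. The brute-force nearest-obstacle distance only suits small grids; a distance transform could replace it on full-resolution images.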

@article{xu2024transformer, title = {Transformer-based Traversability Analysis for Autonomous Navigation in Outdoor Environments with Water Hazard}, author = {Xu, Mingrui and Wang, Yinchuan and Zhang, Xiang and Mao, Donghui and Wang, Chaoqun and Song, Rui and Li, Yibin}, journal = {IEEE Transactions on Intelligent Vehicles}, year = {2024}, month = jun, doi = {10.1109/TIV.2024.3419846}, dimensions = {false}, google_scholar_id = {Tyk-4Ss8FVUC}, }

- ICRA2024

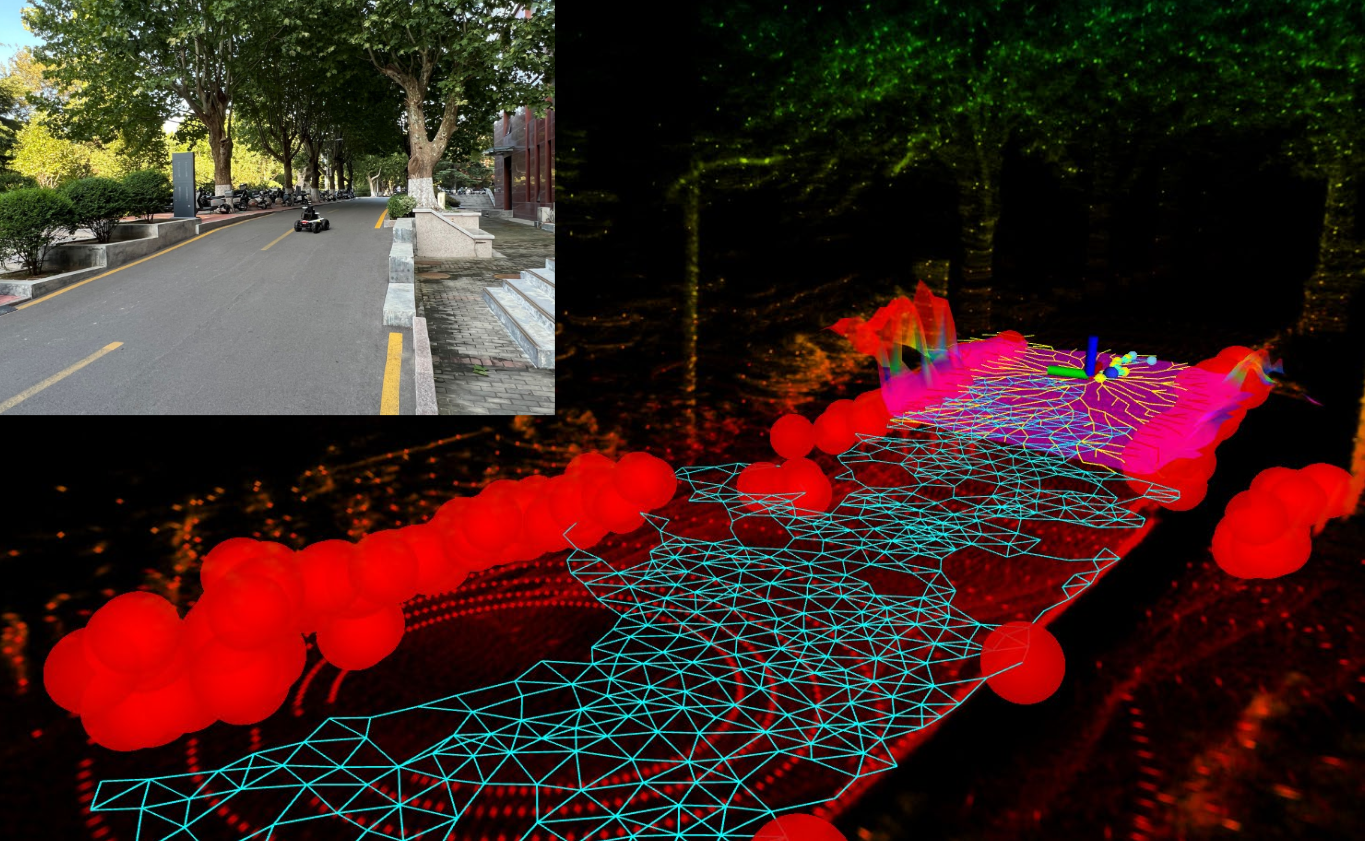

History-Aware Planning for Risk-free Autonomous Navigation on Unknown Uneven Terrain
Yinchuan Wang, Nianfei Du, Yongsen Qin, Xiang Zhang, Rui Song, and Chaoqun Wang
2024 IEEE International Conference on Robotics and Automation (ICRA 2024), Yokohama, Japan, May 2024

It is challenging for a mobile robot to achieve autonomous, mapless navigation in unknown environments with uneven terrain. In this study, we present a layered and systematic pipeline. At the local level, we maintain a tree structure that is dynamically extended as navigation proceeds. This structure unifies planning with terrain identification and explicitly identifies hazardous areas on uneven terrain. In particular, certain nodes of the tree are consistently kept to form a sparse graph at the global level, which records the history of the exploration. A series of subgoals drawn from the tree and the graph is used to lead the navigation. To determine a subgoal, we develop an evaluation method whose input elements can be obtained efficiently from the layered structure. We conduct both simulation and real-world experiments to evaluate the developed method and its key modules. The experimental results demonstrate the effectiveness and efficiency of our method. The robot can travel through unknown uneven regions safely and reach the target rapidly without a preconstructed map.

@inproceedings{wang2024historz, title = {History-Aware Planning for Risk-free Autonomous Navigation on Unknown Uneven Terrain}, author = {Wang, Yinchuan and Du, Nianfei and Qin, Yongsen and Zhang, Xiang and Song, Rui and Wang, Chaoqun}, booktitle = {2024 IEEE International Conference on Robotics and Automation (ICRA 2024)}, location = {Yokohama, Japan}, pages = {777--780}, year = {2024}, month = may, doi = {10.1109/ICRA57147.2024.10610488}, dimensions = {false}, google_scholar_id = {zYLM7Y9cAGgC}, }

2023

- CCC2023

A Comfortable Interaction Strategy for the Visually Impaired Using Quadruped Guidance Robot
Nianfei Du, Yinchuan Wang, Zhengguo Zhu, Yongsen Qin, Guoteng Zhang, and Chaoqun Wang
2023 42nd Chinese Control Conference (CCC), Tianjin, China, Jul 2023

A guidance robot that can guide its user to the destination smoothly and comfortably without rigorous training is expected to bring more convenience to the visually impaired. Although guidance robots have evolved considerably, little attention has been paid to human-robot interaction during guidance, and an increasing interaction force makes the experience unpleasant for the user. In this paper, we propose an interaction strategy for the visually impaired using a quadruped guidance robot, based on compliance control, so that the user is guided more comfortably. With the proposed strategy, the user can track the robot’s trajectory by perceiving variations in the traction force, or control the robot's movement by applying force to it. We also build a quadruped guidance robot system with an autonomous navigation algorithm. The robot plans a safe path that ensures the user does not collide with obstacles when following the robot. The robot then continuously interacts with its user while following the planned path to the goal to provide a comfortable experience. Experiments show that the interaction strategy deployed on our quadruped guidance robot reduces the traction force during guidance and improves the user experience.
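Compliance control in a guidance robot is often realised as an admittance model, in which the measured interaction force drives a virtual mass-damper whose velocity becomes the motion command. The sketch below shows that generic model along a single axis; the class name, virtual mass, damping and speed limit are hypothetical values, not the parameters or controller used in the paper.

```python
from dataclasses import dataclass


@dataclass
class Admittance1D:
    """Virtual mass-damper along one axis: mass * dv/dt + damping * v = force."""

    mass: float = 5.0      # kg, hypothetical virtual mass
    damping: float = 20.0  # N*s/m, hypothetical virtual damping
    v_max: float = 1.0     # m/s, hypothetical speed limit for safety
    v: float = 0.0         # current commanded velocity, m/s

    def step(self, force, dt):
        """Advance one control period with explicit Euler and return the velocity command.

        Explicit Euler is stable here while dt * damping / mass < 2.
        """
        acc = (force - self.damping * self.v) / self.mass
        self.v += acc * dt
        self.v = max(-self.v_max, min(self.v_max, self.v))  # clamp to the speed limit
        return self.v
```

Under a constant 10 N pull the commanded velocity settles at force / damping = 0.5 m/s with time constant mass / damping = 0.25 s; larger damping makes the robot feel stiffer to the user, and a smaller virtual mass makes it respond faster.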

- BIROB

Autonomous battery-changing system for UAV’s lifelong flight
Jiyang Chen, Wenxi Li, Yingting Sha, Yinchuan Wang, Zhenqiang Zhang, Shikuan Li, Chaoqun Wang, and Sile Ma
Biomimetic Intelligence and Robotics, Jun 2023

Lifelong flight of unmanned aerial vehicles (UAVs) is essential for tasks such as aerial patrol and aerial rescue. However, traditional UAVs have limited power to sustain flight and need skilled operators to control the charging process manually. Manufacturers and users are therefore seeking a reliable autonomous battery-changing solution. To address this need, we propose and design an autonomous battery-changing system for UAVs using the theory of inventive problem solving (TRIZ) and user-centered design (UCD) methods. For practical application, we employ UCD to thoroughly analyze user requirements and identify multiple pairs of technical contradictions, which we then resolve using TRIZ. Furthermore, we design an autonomous battery-changing hardware system that meets user requirements and changes the battery after the UAV lands automatically. Finally, we conduct experiments to validate the system’s effectiveness. The experimental results show that our system can carry out the autonomous battery-changing task with high efficiency and robustness in real environments.

2022

- IROS2022

Low-drift LiDAR-only Odometry and Mapping for UGVs in Environments with Non-level Roads
Xiangyu Chen, Yinchuan Wang, Chaoqun Wang, Rui Song, and Yibin Li
2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, Oct 2022

This study focuses on localization and mapping for UGVs deployed in environments with non-level roads. In these scenarios, vehicles travel over ground that is flat but not necessarily level, i.e., ascents or descents, which may cause robot pose drift and map distortion. We develop a low-drift LiDAR odometry and mapping approach for UGVs with LiDAR as the only exteroceptive sensor. A factor-graph-based pose optimization method is developed with a specifically designed factor named the slope factor. This factor includes slope information estimated from the real-time LiDAR data stream. The slope information is also used to enhance the loop-closure detection procedure. Moreover, an incremental pitch estimation mechanism is designed to further refine pose estimation. We demonstrate the effectiveness of the developed framework in real-world environments, where the odometry drift is lower and the map more precise than those of state-of-the-art methods. Notably, our method also performs convincingly on the KITTI dataset, demonstrating its strength in more general application scenarios.
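A common way to obtain slope information from LiDAR data is to fit a plane to points already classified as ground and read the inclination from its coefficients. The least-squares sketch below illustrates that step only; ground segmentation, the slope factor, and the incremental pitch estimation of the paper are not reproduced, the function names are hypothetical, and the sign convention (positive when the ground rises along +x) is an assumption.

```python
import numpy as np


def fit_ground_plane(points):
    """Least-squares fit of z = a*x + b*y + c to (N, 3) ground points."""
    A = np.column_stack([points[:, 0], points[:, 1], np.ones(len(points))])
    (a, b, c), *_ = np.linalg.lstsq(A, points[:, 2], rcond=None)
    return a, b, c


def slope_angles(points):
    """Return (slope along x, slope along y, total inclination) in radians."""
    a, b, _ = fit_ground_plane(points)
    return np.arctan(a), np.arctan(b), np.arctan(np.hypot(a, b))
```

On real scans the ground points would first need to be segmented, and a robust estimator such as RANSAC would limit the influence of outliers before the least-squares refinement.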

@inproceedings{chen2022low, title = {Low-drift LiDAR-only Odometry and Mapping for UGVs in Environments with Non-level Roads}, author = {Chen, Xiangyu and Wang, Yinchuan and Wang, Chaoqun and Song, Rui and Li, Yibin}, booktitle = {2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)}, location = {Kyoto, Japan}, pages = {13174--13180}, year = {2022}, organization = {IEEE}, month = oct, doi = {10.1109/IROS47612.2022.9982264}, dimensions = {false}, google_scholar_id = {u5HHmVD_uO8C}, }

- ROBIO2022

Information Map Prediction based on Learning Network for Reinforced Autonomous Exploration
Yinchuan Wang, Mingrui Xu, Xiangyu Chen, Xiang Zhang, Chaoqun Wang, and Rui Song
2022 IEEE International Conference on Robotics and Biomimetics (ROBIO), Xishuangbanna, China, Dec 2022

Autonomous exploration is a prerequisite for reliable robot navigation in unknown environments because it provides the necessary information. The goal is to build a precise environment map while localizing the robot robustly. To achieve this goal, this paper develops an information map that jointly captures mapping and localization uncertainty. It consists of a localization uncertainty map and a model uncertainty map. The former evaluates the landmarks around the robot, and the latter indicates map completeness based on information theory. The information map serves as the input for better decisions about where to go, achieving higher localization and mapping performance. To improve the efficiency of information map prediction, we develop a learning-based map prediction framework for which a dataset is established autonomously. The effectiveness and efficiency of the developed framework are verified through experiments in simulation environments.
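The map-completeness part of an information map is commonly measured with the Shannon entropy of an occupancy grid: an unknown cell (occupancy probability 0.5) carries 1 bit, and a cell known to be free or occupied carries almost none. The sketch below computes that quantity and an optimistic information gain for a set of cells assumed visible from a candidate view; the function names and the visibility mask are hypothetical, and the localization-uncertainty map and learned predictor from the paper are not reproduced.

```python
import numpy as np


def cell_entropy(p):
    """Binary Shannon entropy (bits) of occupancy probabilities p in [0, 1]."""
    p = np.clip(np.asarray(p, dtype=float), 1e-12, 1 - 1e-12)  # avoid log2(0)
    return -(p * np.log2(p) + (1 - p) * np.log2(1 - p))


def map_entropy(grid):
    """Total entropy of an occupancy grid; lower means a more complete map."""
    return float(cell_entropy(grid).sum())


def information_gain(grid, visible_mask):
    """Entropy removed if every visible cell became fully known (an optimistic bound)."""
    return float(cell_entropy(grid[visible_mask]).sum())
```

Ranking candidate viewpoints by `information_gain` gives a simple "where to go" heuristic; a learned predictor, as in the paper, would aim to approximate such maps faster than evaluating them directly.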

@inproceedings{wang2022information, title = {Information Map Prediction based on Learning Network for Reinforced Autonomous Exploration}, author = {Wang, Yinchuan and Xu, Mingrui and Chen, Xiangyu and Zhang, Xiang and Wang, Chaoqun and Song, Rui}, booktitle = {2022 IEEE International Conference on Robotics and Biomimetics (ROBIO)}, location = {Xishuangbanna, China}, pages = {1982--1988}, year = {2022}, organization = {IEEE}, month = dec, doi = {10.1109/ROBIO55434.2022.10011800}, dimensions = {false}, google_scholar_id = {u-x6o8ySG0sC}, }